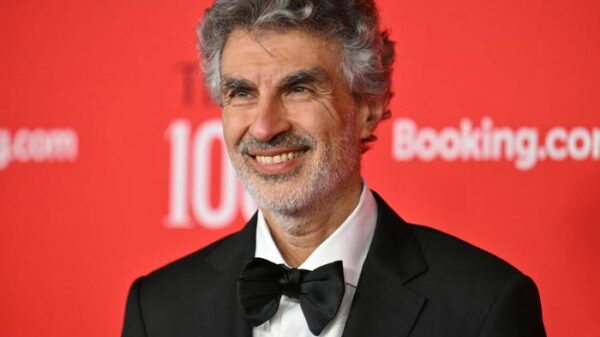

URGENT UPDATE: AI technology is hitting a critical data shortage that is reshaping how systems are developed, according to Neema Raphael, data chief at Goldman Sachs. In an eye-opening podcast interview released on October 3, 2025, Raphael warned that the industry has “already run out of data,” forcing developers to rethink their strategies.

The warning matters for the future of artificial intelligence, arriving amid the explosive growth in AI applications since ChatGPT’s debut nearly three years ago. Raphael cautioned that without new data sources, the quality of AI output could decline, producing what he called “AI slop.” The foundation of AI innovation is now at stake.

As traditional data sources run dry, developers are increasingly turning to synthetic data (artificially generated text, images, and code) to fill the gaps. This approach carries its own risks, including flooding AI models with low-quality material. “The real interesting thing is how previous models will shape the future,” Raphael said, pointing to the controversy over the reportedly low development costs of China’s DeepSeek.

Despite the challenges, Raphael remains optimistic about the potential of untapped proprietary data. Businesses possess vast reserves of information that could prove invaluable for enhancing AI capabilities. “There’s still a lot of juice to be squeezed from enterprise data,” he noted, hinting that the next frontier for AI growth may lie within corporate datasets rather than the open internet.

Raphael’s comments echo a view gaining ground across the industry. Ilya Sutskever, a co-founder of OpenAI, warned at the NeurIPS conference in December 2024 that “all the useful data online had already been used” and predicted that pre-training as we know it “will unquestionably end.” That prospect raises hard questions about how long the current pace of AI progress can be sustained.

For companies, the challenge now goes beyond acquiring more data; it means ensuring that the data is useful and relevant to the business. Raphael remarked, “The challenge is understanding the data, understanding the business context, and then normalizing it for effective consumption.” If left unaddressed, this complexity could slow the integration of AI into business practices.

As the conversation around AI evolves, Raphael raised a further concern: what happens if future models rely solely on machine-generated data? “If all of the data is synthetically generated, how much human data can then be incorporated?” he asked. The answer may shape the trajectory of AI and how developers navigate the current landscape.

With the AI industry at a crossroads, the focus now shifts to harnessing proprietary data effectively. As companies like Goldman Sachs look for solutions, the effects of this data shortage could be felt across sectors, touching everything from client interactions to trading flows.

Stay tuned as this story develops and the industry grapples with what the data shortage means for AI’s future.