Pairing a local large language model (LLM) with NotebookLM can noticeably speed up research on complex projects. The combination keeps information organized while leaving the user in control of their data and context.

Enhancing Research Workflow

People doing extensive research often find traditional methods cumbersome. NotebookLM helps by organizing material and generating insights grounded in the specific sources a user supplies. For some, though, the need for speed, privacy, and creative control is a reason to look at alternatives. Combining NotebookLM with a local LLM brings those strengths together and can raise productivity considerably.

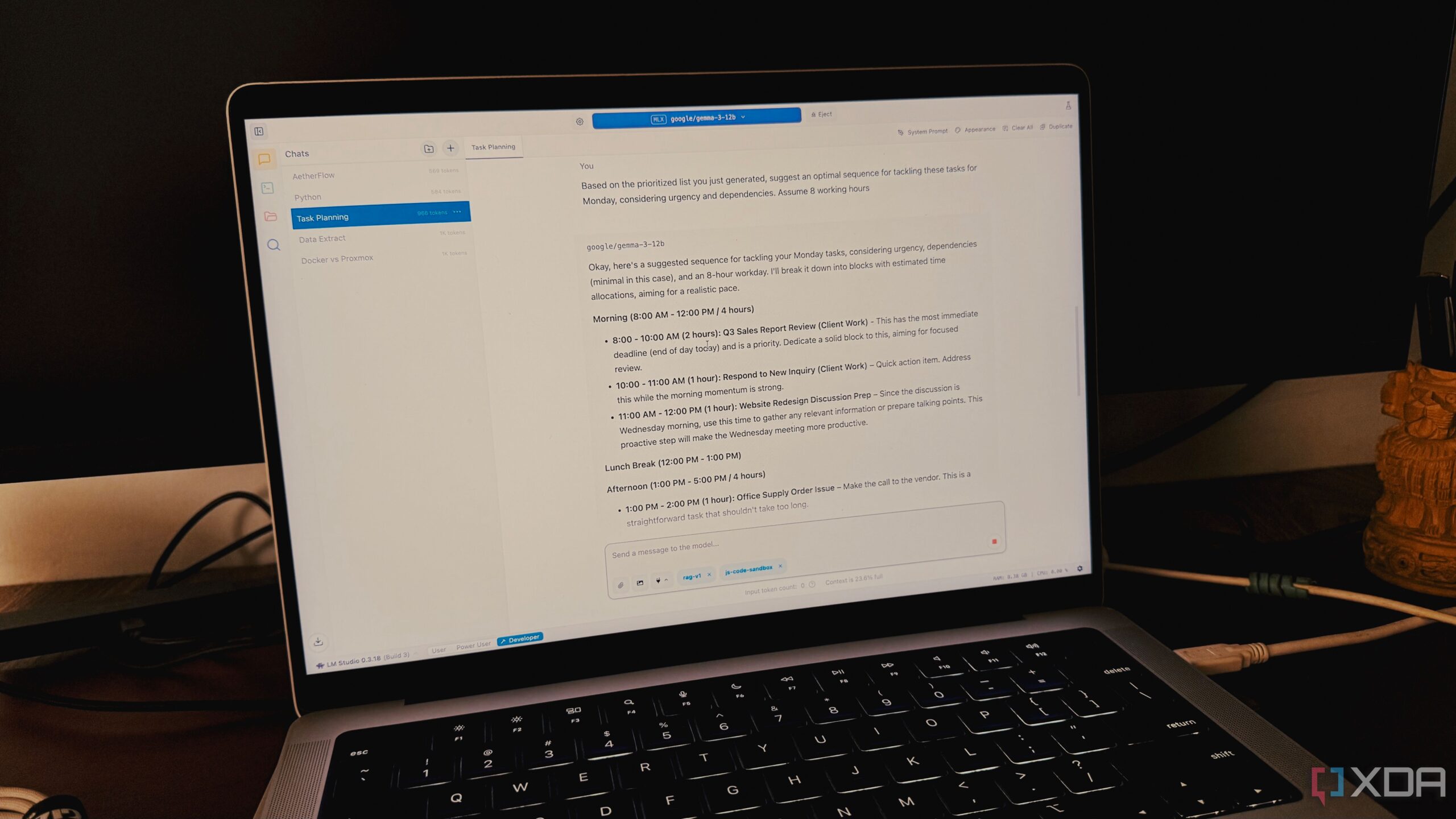

The integration began as one user's experiment in optimizing their workflow with both tools. The local LLM, run through LM Studio and using a 20-billion-parameter variant of an OpenAI model, responds quickly and helps build a deeper understanding of complex topics, such as self-hosting applications with Docker.

A Hybrid Approach to Knowledge Acquisition

First, the user asks the local LLM for a structured overview of the subject. When exploring self-hosting with Docker, for instance, they request a primer covering essential components, security practices, and networking fundamentals. This produces a solid starting knowledge base almost instantly and at no additional cost.
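
The primer request described above can be sketched as a call to LM Studio's local server, which exposes an OpenAI-compatible chat completions endpoint. This is only a minimal illustration: the address is LM Studio's default and may differ on a given machine, and the model name, system prompt, temperature, and helper names are hypothetical choices, not details from the user's setup.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its default address.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_primer_request(topic, sections, model="local-model"):
    """Build an OpenAI-style chat payload asking for a structured primer."""
    outline = "\n".join(f"- {s}" for s in sections)
    prompt = (
        f"Write a structured primer on {topic}. "
        f"Use one headed section for each of these areas:\n{outline}"
    )
    return {
        # Hypothetical placeholder: use the identifier LM Studio shows
        # for the model that is actually loaded.
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise technical writer."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.3,  # illustrative value, not from the article
    }


def request_primer(payload, url=LM_STUDIO_URL, timeout=120):
    """POST the payload to the local server and return the generated text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `request_primer(build_primer_request("self-hosting applications with Docker", ["essential components", "security practices", "networking fundamentals"]))` would return the primer text. Keeping payload construction separate from the network call makes the prompt easy to inspect and adjust before anything is sent.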

Next, the user copies this overview into a new note in NotebookLM, placing the local LLM’s structured summary alongside the source material already collected there, including PDFs, YouTube transcripts, and blog posts. Once NotebookLM is told to treat the overview as a source, its features can draw on the overview together with the rest of the material.
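
The article describes pasting the overview into a note by hand. As one possible variation, assuming the overview is already available as a string, it could first be written to a Markdown file so that it can be pasted from there or uploaded as a file source. The helper names, the title line, and the file naming scheme below are hypothetical.

```python
import re
from pathlib import Path


def slugify(title):
    """Turn a topic title into a lowercase, hyphen-separated file stem."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug or "overview"


def save_overview(text, topic, out_dir="."):
    """Write the overview to '<slug>-primer.md' with a title line; return the path."""
    path = Path(out_dir) / f"{slugify(topic)}-primer.md"
    header = f"# Local LLM primer: {topic}\n\n"
    path.write_text(header + text.strip() + "\n", encoding="utf-8")
    return path
```

A title line that marks the file as LLM-generated makes it easier to tell this source apart from the PDFs, transcripts, and blog posts when checking citations later.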

This method has proven efficient. With the overview in place, the user can pose specific questions, such as, “What essential components and tools are necessary for successfully self-hosting applications using Docker?” and receive relevant, contextual answers in a fraction of the time.

Additionally, the audio overview feature has become a significant time-saver. By clicking the Audio Overview button, the user can listen to a personalized summary of their entire research stack, transforming the information into a podcast format that can be consumed away from the desk.

NotebookLM's citation and source-checking capabilities also allow quick validation of information. Because each insight can be traced back to the source material it came from, much less manual fact-checking is needed.

Overall, combining a local LLM with NotebookLM changed how this user approaches deep research. They had expected only marginal improvements, but the hybrid strategy avoids limitations they associate with cloud-only and local-only workflows.

For those who want to maximize productivity while keeping control over their data, this integration offers a promising model for research work. As AI tools continue to develop, there is likely room for further efficiency gains in workflows like this one.
