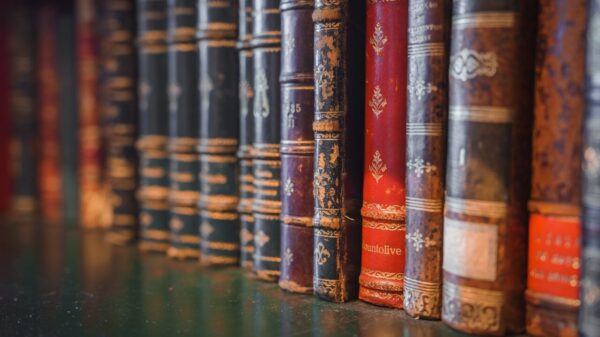

Concerns are mounting as librarians report an increase in reference inquiries about non-existent books and articles, largely driven by artificial intelligence (AI) chatbots like ChatGPT. According to a recent article in *Scientific American*, Sarah Falls, chief of researcher engagement at the Library of Virginia, estimates that approximately 15% of all emailed reference questions the library receives are generated by AI chatbots.

Requests to track down fake citations are becoming a significant burden for librarians, who are trained to assist the public in locating credible information. Falls noted that many individuals seem to trust the AI-generated results over the expertise of librarians, which frustrates the librarians trying to help them. This phenomenon reflects a broader trend in which AI is perceived as a more reliable source of information, despite its known limitations.

The issue has garnered attention from various organizations, including the International Committee of the Red Cross (ICRC). In a recent advisory, the ICRC addressed the rise of AI-generated archival references, stating, “If a reference cannot be found, this does not mean that the ICRC is withholding information.” They emphasized that incomplete citations or documents held in other institutions could explain the discrepancies, alongside the growing incidence of AI-generated misinformation.

In 2025, a notable instance highlighted the problem when a freelance writer for the Chicago Sun-Times produced a summer reading list that included ten non-existent titles among the recommended books. Similarly, at least seven citations in the initial report from Robert F. Kennedy Jr.’s commission turned out to be non-existent. These examples underscore the challenges librarians face in combating the misinformation propagated by AI tools.

While the rise of AI-generated content raises significant concerns, fake citations are not a new problem. A 2017 investigation by a professor at Middlesex University revealed that over 400 academic papers cited a non-existent research document that was essentially gibberish. The citations were likely the result of a lack of rigor rather than intentional deception.

The growing reliance on AI for information is prompting questions about why users might trust these tools over human experts. One possible explanation lies in the authoritative tone that AI often employs, which can create an illusion of credibility. As people become accustomed to interacting with chatbots, they may develop a sense of trust that overshadows the input from qualified professionals.

Moreover, some users attempt to enhance the reliability of AI outputs through specific prompts, believing that phrases like “don’t hallucinate” might improve the quality of the information generated. If such techniques were effective, they would likely already be standard practice in the technology industry.

As librarians navigate the complexities of misinformation fueled by AI, their expertise remains invaluable in guiding the public toward accurate and trustworthy resources. The increasing prevalence of AI-generated falsehoods emphasizes the importance of critical thinking and the need to verify information from credible sources.