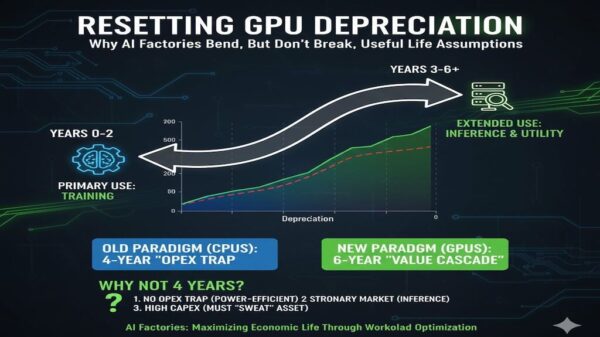

The debate over the useful life of graphics processing units (GPUs) has intensified, particularly regarding their role in artificial intelligence (AI). Contrary to the prevailing narrative that GPUs have a short lifespan, research indicates that these components have a considerably longer useful life than many industry observers claim. This analysis explores how GPU depreciation is evolving in the context of AI factories.

Changing Depreciation Models

In January 2020, Amazon extended the depreciation schedule for its server assets from three years to four. The decision reflected the company’s finding that its servers could operate effectively beyond the three-year mark. As the influence of Moore’s Law diminished, Amazon Web Services (AWS) used its scale to diversify use cases, extending revenue generation from its Elastic Compute Cloud (EC2) assets. This shift prompted other major cloud providers to lengthen their own schedules as well, with six-year depreciation schedules for server assets being adopted beginning in fiscal years 2023 to 2024.

The question now is whether this trend will persist in the AI factory era. It is anticipated that today’s advanced training infrastructure will continue to support a variety of future use cases, thereby extending the useful life of GPUs beyond their original applications. While innovation cycles from companies like Nvidia may shorten depreciation periods from the current six years to a more cautious five-year timeframe, the overall trend appears to favor longer useful lives.

Comparative Analysis of Depreciation Schedules

Recent research highlights the differences in depreciation schedules between major hyperscalers and AI-native neoclouds. While AWS, Google Cloud, and Microsoft Azure have settled on a six-year depreciation timeline, AI-focused companies like CoreWeave, Nebius, and Lambda Labs have opted for shorter, more conservative estimates of four to five years. This divergence underscores the distinct pressures faced by AI-first clouds, where performance and efficiency gains between GPU generations directly affect competitiveness.

The findings suggest that as neoclouds expand, their shorter depreciation cycles may influence the modeling practices of larger hyperscalers, particularly as accelerated computing consumes an increasing share of capital expenditures (CapEx). This shift reflects a broader recognition that AI applications will require a more dynamic approach to infrastructure management.

The lifecycle of GPUs is also changing. The research indicates that silicon does not become obsolete at the end of its training usefulness; instead, it transitions to less demanding, yet still profitable, tasks. This transition is critical for maximizing revenue in the evolving landscape of AI applications.

The implications of these shifts are significant. A one-year change in the useful life assumption for a trillion-dollar GPU estate can swing annual operating income by tens of billions of dollars. Nonetheless, relative to the overall operating profits of hyperscalers, these changes may not be as transformative as some media narratives suggest.
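The scale of that sensitivity can be checked with simple arithmetic. The sketch below is illustrative only: it assumes straight-line depreciation with zero salvage value and a hypothetical asset base of exactly $1 trillion, none of which is specified in the research discussed here. The function and constant names are likewise made up for this example.

```python
# Illustrative sketch: straight-line depreciation under two useful-life assumptions.
# Assumptions (not from the source): straight-line method, zero salvage value,
# and a hypothetical $1 trillion GPU asset base.

HYPOTHETICAL_ASSET_BASE_USD = 1_000_000_000_000  # $1 trillion, illustrative only


def annual_straight_line_expense(cost: float, useful_life_years: int,
                                 salvage_value: float = 0.0) -> float:
    """Return the annual depreciation expense under the straight-line method."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return (cost - salvage_value) / useful_life_years


def expense_change(cost: float, old_life_years: int, new_life_years: int) -> float:
    """Return the change in annual expense when the useful life assumption changes.

    A positive result means higher annual expense (lower operating income).
    """
    old_expense = annual_straight_line_expense(cost, old_life_years)
    new_expense = annual_straight_line_expense(cost, new_life_years)
    return new_expense - old_expense


if __name__ == "__main__":
    six_year = annual_straight_line_expense(HYPOTHETICAL_ASSET_BASE_USD, 6)
    five_year = annual_straight_line_expense(HYPOTHETICAL_ASSET_BASE_USD, 5)
    delta = expense_change(HYPOTHETICAL_ASSET_BASE_USD, 6, 5)
    print(f"6-year schedule: ${six_year / 1e9:.1f}B per year")
    print(f"5-year schedule: ${five_year / 1e9:.1f}B per year")
    print(f"Added annual expense: ${delta / 1e9:.1f}B")
```

Under these assumptions, moving from a six-year to a five-year schedule raises annual depreciation expense from roughly $167 billion to $200 billion, a difference of about $33 billion, which is consistent with the "tens of billions" order of magnitude. Real asset bases are acquired over many years and may use different methods, so actual figures would differ.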

Investors are encouraged to focus on operating and free cash flows to gain a clearer understanding of business performance, especially as depreciation cycles continue to evolve. Because depreciation is a non-cash accounting charge, changes to useful life assumptions move reported operating income but not the cash a business actually generates. As the AI landscape matures, the proliferation of applications, automation, and fine-tuning processes will further extend the useful life of GPUs and make the economic returns on these assets more resilient.

Ultimately, research indicates that while the useful life of GPUs may compress slightly due to the rapid pace of innovation, their extended economic utility will prevail. As hyperscalers converge on a five-year depreciation model, the focus will shift towards maximizing the utility of GPUs across training, inference, and internal workloads. This dynamic underscores the importance of maintaining access to essential resources such as compute, land, power, and skilled personnel in the race to build effective AI factories.

As investment in this sector scales into the trillions, cash flow metrics will become critical indicators of performance, reflecting the ongoing evolution of the GPU market and its implications for future valuations.