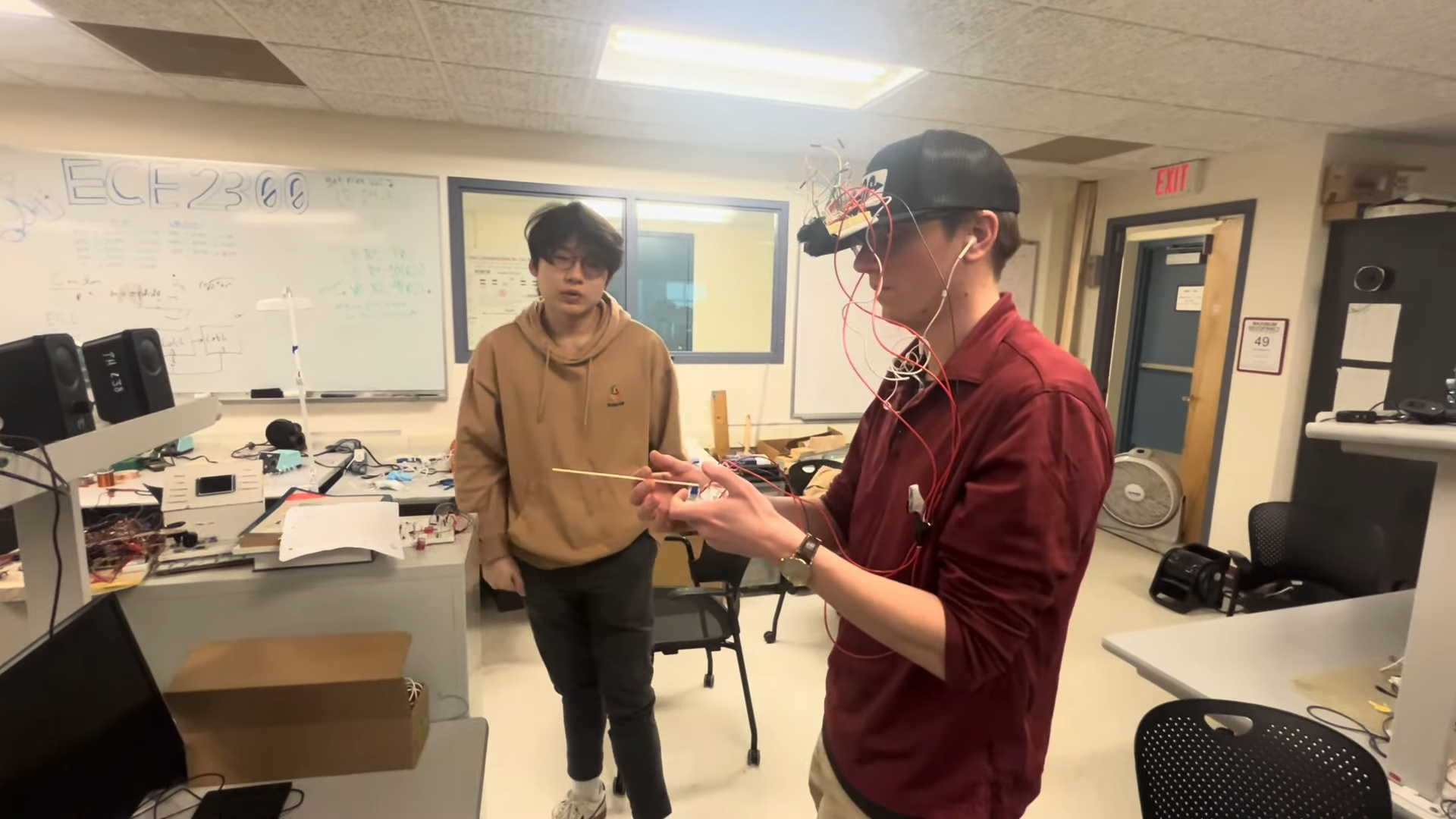

Students in Cornell University's ECE4760 course have built a spatial audio system into a hat. The project, developed by Anishka Raina, Arnav Shah, and Yoon Kang, lets the wearer perceive the direction and proximity of nearby objects through audio feedback.

At the core of the project is a Raspberry Pi Pico paired with a TF-Luna LiDAR sensor, which measures the distance to objects around the wearer. Because the sensor is mounted on the hat, the user scans their immediate environment by turning their head from side to side, picking up obstacles and other items of interest.

The design does not include head tracking; instead, the user turns a potentiometer to tell the microcontroller which direction they are facing while scanning. The Pi Pico processes the LiDAR data to work out the range and location of nearby objects. It then generates a stereo audio signal that conveys the closeness and relative direction of those objects using a spatial audio technique known as interaural time difference (ITD), the small difference in the time a sound takes to reach each ear.
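To make the ITD idea concrete, here is a minimal Python sketch of how a potentiometer reading and a distance measurement could be turned into per-channel delays and a loudness value. It is an illustration under stated assumptions, not the students' implementation: the Woodworth spherical-head formula, the head radius, the sample rate, the linear potentiometer mapping, the distance-to-loudness curve, and every function name below are hypothetical choices made for this example.

```python
import math

# All constants are assumptions chosen for illustration.
SPEED_OF_SOUND_M_S = 343.0   # approximate speed of sound in air near 20 °C
HEAD_RADIUS_M = 0.0875       # assumed average head radius
SAMPLE_RATE_HZ = 44_100      # assumed audio sample rate
MAX_RANGE_M = 8.0            # assumed maximum distance used for loudness scaling


def adc_to_azimuth(adc_value, adc_max=4095, span_deg=180.0):
    """Map a 12-bit potentiometer reading linearly onto -90..+90 degrees."""
    fraction = min(max(adc_value / adc_max, 0.0), 1.0)
    return (fraction - 0.5) * span_deg


def itd_seconds(azimuth_deg):
    """Woodworth spherical-head ITD; positive when the source is to the right."""
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def channel_delays(azimuth_deg):
    """Return (left_delay, right_delay) in whole samples."""
    delay = round(abs(itd_seconds(azimuth_deg)) * SAMPLE_RATE_HZ)
    # A source on the right reaches the right ear first, so the left
    # channel is the one that gets delayed (and vice versa).
    return (delay, 0) if azimuth_deg > 0 else (0, delay)


def distance_to_gain(distance_m):
    """Closer objects sound louder: linear ramp from 1.0 (touching) to 0.0."""
    clamped = min(max(distance_m, 0.0), MAX_RANGE_M)
    return 1.0 - clamped / MAX_RANGE_M


if __name__ == "__main__":
    azimuth = adc_to_azimuth(3000)          # potentiometer turned to the right
    left, right = channel_delays(azimuth)
    print(f"azimuth={azimuth:.1f} deg, delays L={left} R={right} samples, "
          f"gain={distance_to_gain(2.0):.2f}")
```

With these assumed values, an object directly to one side (90 degrees) produces a delay of about 0.66 ms, or 29 samples at 44.1 kHz, on the far-side channel. Delays this small are enough for the brain to place a sound to the left or right.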

The project is an example of sensory augmentation: it conveys spatial information through sound, extending what the wearer can learn about their surroundings by ear. That makes the hat a practical option for people who may benefit from greater environmental awareness.

The students’ work fits a broader trend of building technology into everyday items, with potential applications in personal safety and navigation. Similar projects have surfaced in recent years, reflecting growing interest in augmenting human perception through technology.