Researchers at MIT have developed a new assistant called CodeSteer that significantly enhances the problem-solving capabilities of large language models (LLMs) by guiding them in switching between text and code generation. The work addresses a common limitation of LLMs, which excel at understanding textual content but often struggle with basic mathematical problems and algorithmic tasks. The results indicate that integrating CodeSteer can improve accuracy on symbolic tasks by more than 30 percentage points.

Bridging Text and Code

Large language models are designed primarily for textual reasoning, making them more prone to errors when faced with mathematical queries. For instance, if asked to compare the numbers 9.11 and 9.9, an LLM might incorrectly rely on text-based reasoning rather than executing code. To counter this, CodeSteer, a smaller LLM itself, acts as a coach that directs the larger model on when to apply code effectively.
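The gap between the two approaches can be illustrated with a minimal Python sketch. It is not taken from the research: `compare_like_version_strings` is a hypothetical stand-in for one plausible text-style mistake (reading the digits after the decimal point as whole numbers), while `compare_as_numbers` shows what a short piece of executed code would do instead.

```python
from decimal import Decimal


def compare_as_numbers(a: str, b: str) -> str:
    """Return whichever decimal string has the larger numeric value."""
    return a if Decimal(a) > Decimal(b) else b


def compare_like_version_strings(a: str, b: str) -> str:
    """Hypothetical text-style error: compare the parts around the dot
    as separate integers, so "9.11" is read as (9, 11) and "9.9" as (9, 9)."""
    a_parts = [int(part) for part in a.split(".")]
    b_parts = [int(part) for part in b.split(".")]
    return a if a_parts > b_parts else b


print(compare_as_numbers("9.11", "9.9"))            # prints 9.9
print(compare_like_version_strings("9.11", "9.9"))  # prints 9.11
```

The numeric comparison returns 9.9, while the version-style reading returns 9.11, which is the kind of error that running code avoids.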

According to Chuchu Fan, an associate professor of aeronautics and astronautics and principal investigator in the MIT Laboratory for Information and Decision Systems (LIDS), “We want to enable LLMs to select the right tools and methods.” CodeSteer reviews each response from the larger model and suggests adjustments until the answer is correct.
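The review-and-adjust cycle described above can be sketched as a simple loop. This is an illustrative outline under stated assumptions, not the researchers' implementation: the function names `steer`, `toy_solve`, and `toy_review`, the `max_rounds` cap, and the guidance strings are all hypothetical, and the two toy functions stand in for the larger model and for the smaller coaching model.

```python
from decimal import Decimal
from typing import Callable, Optional, Tuple


def steer(
    question: str,
    solve: Callable[[str, str], str],
    review: Callable[[str, str], Optional[str]],
    max_rounds: int = 5,
) -> Tuple[str, int]:
    """Ask `solve` for an answer, let `review` critique it, and repeat.

    `review` returns None to accept the answer, or new guidance to retry.
    Returns the last answer and the number of rounds used.
    """
    guidance = "Choose text reasoning or code."
    answer = ""
    for round_number in range(1, max_rounds + 1):
        answer = solve(question, guidance)
        feedback = review(question, answer)
        if feedback is None:
            return answer, round_number
        guidance = feedback
    return answer, max_rounds


def toy_solve(question: str, guidance: str) -> str:
    """Stand-in for the larger model: wrong unless told to use code."""
    if guidance.startswith("Use code"):
        return str(max(Decimal("9.11"), Decimal("9.9")))
    return "9.11"


def toy_review(question: str, answer: str) -> Optional[str]:
    """Stand-in for the coach: accept 9.9, otherwise ask for code."""
    if answer == "9.9":
        return None
    return "Use code: compare the two values numerically."


print(steer("Which is larger, 9.11 or 9.9?", toy_solve, toy_review))
# prints ('9.9', 2)
```

In this toy run the first round produces the wrong answer, the reviewer asks for code, and the second round is accepted. The cap on rounds is a design choice of the sketch so that the loop always terminates.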

The research team, which includes graduate students from LIDS and the University of Illinois at Urbana-Champaign, has prepared its findings for presentation at the International Conference on Machine Learning. The team found that augmenting an LLM with CodeSteer can enhance performance on complex tasks such as generating robot paths in unpredictable environments and optimizing international supply chains.

Methodology and Results

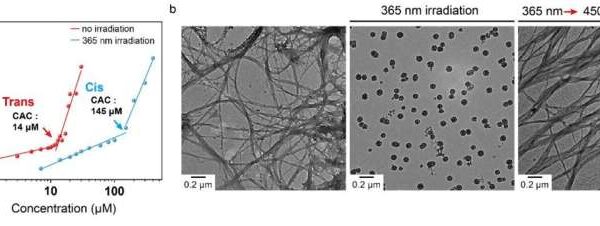

Research has shown that LLMs often attempt to generate simpler, less effective code when faced with symbolic calculations. CodeSteer tackles this issue by prompting the model to use more complex coding methods, ensuring that the generated code effectively addresses the task at hand. The team developed a dataset named SymBench, which includes 37 complex symbolic tasks, to test their methods.

In experiments, CodeSteer outperformed nine baseline methods, increasing average accuracy from 53.3 percent to 86.4 percent (a gain of 33.1 percentage points), and maintained high performance even on previously unseen tasks. As a result, a general-purpose model equipped with CodeSteer can achieve better accuracy than state-of-the-art models designed specifically for complex reasoning.

“By augmenting an LLM with the ability to smartly use coding, we can take a model that is already very strong and improve its performance even more,” said Yongchao Chen, a graduate student involved in the study.

The research was supported by the U.S. Office of Naval Research and the MIT-IBM Watson AI Lab.

Experts in the field have praised the approach, with Jinsung Yoon, a staff research scientist at Google Cloud AI, noting that the method enables LLMs to achieve significant performance improvements without requiring direct fine-tuning. “This research represents a substantial contribution that promises to significantly enhance the application of LLMs to a diverse range of tasks,” Yoon added.

As the team continues to refine CodeSteer, they aim to streamline its prompting process and explore the possibility of developing a unified model that integrates both textual reasoning and code generation. The research could pave the way for more robust AI applications in complex real-world scenarios.