Researchers from Zhejiang University have developed FedMcon, a new aggregation method for federated learning. It is designed to overcome a weakness of conventional aggregation algorithms: their difficulty with diverse client data distributions. The method is described in the team's study “FedMcon: an adaptive aggregation method for federated learning via meta controller.”

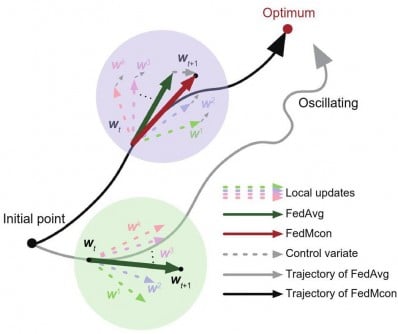

Federated learning trains machine learning models collaboratively across decentralized clients without moving their raw data, which preserves data privacy. The standard aggregation algorithm, federated averaging (FedAvg), builds the global model by averaging client models in proportion to each client's number of training samples. When client data distributions are heterogeneous and unknown to the server, this fixed weighting often leads to slow convergence and weaker generalization. To address these problems, the research team introduced a meta-learning framework built around a learnable controller.
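For reference, FedAvg forms the global model as the weighted average $w = \sum_k (n_k / n)\, w_k$, where $n_k$ is client $k$'s number of training samples and $n = \sum_k n_k$. The minimal sketch below represents each model as a flat list of floats, a simplification; real frameworks average tensors layer by layer. The function name `fedavg` is illustrative only.

```python
from typing import List, Sequence


def fedavg(client_params: Sequence[Sequence[float]],
           num_samples: Sequence[int]) -> List[float]:
    """Average flattened client parameter vectors, weighting each client by its sample count."""
    if not client_params or len(client_params) != len(num_samples):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(num_samples)
    global_params = [0.0] * len(client_params[0])
    for params, n_k in zip(client_params, num_samples):
        weight = n_k / total  # fixed weight n_k / n; ignores how the client's data is distributed
        for i, p in enumerate(params):
            global_params[i] += weight * p
    return global_params


# Two clients holding 1 and 3 samples: weights 0.25 and 0.75.
print(fedavg([[1.0, 2.0], [3.0, 6.0]], [1, 3]))
```

The weights depend only on sample counts, not on how each client's data differs from the target distribution, which is the limitation the paragraph above describes.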

Adaptive Aggregation for Enhanced Performance

In FedMcon, a controller trained on a small proxy dataset serves as the aggregator: it learns how to combine heterogeneous local models into a global model that better serves the objective of the federated learning task. Experimental results indicate that FedMcon performs especially well on non-independent and identically distributed (non-IID) data.
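The article does not specify the controller's architecture or training procedure, so the following is only an illustrative sketch of the general idea of a learned aggregator, not FedMcon's actual method. Assumptions (all hypothetical): the "controller" is just one learnable logit per client, a softmax turns the logits into aggregation weights, and the logits are tuned by gradient descent to minimize the aggregated model's mean squared error on a small proxy dataset. Each model is a 1-D linear regressor $y = a x + b$ so that all gradients stay analytic.

```python
import math
from typing import List, Sequence, Tuple

Model = Tuple[float, float]  # (slope a, intercept b) of y = a*x + b
Data = Sequence[Tuple[float, float]]  # (x, y) pairs


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def aggregate(models: Sequence[Model], weights: Sequence[float]) -> Model:
    """Weighted average of client models (same form as FedAvg, but with learned weights)."""
    a = sum(w * m[0] for w, m in zip(weights, models))
    b = sum(w * m[1] for w, m in zip(weights, models))
    return a, b


def proxy_loss(model: Model, data: Data) -> float:
    a, b = model
    return sum((a * x + b - y) ** 2 for x, y in data) / len(data)


def train_controller(models: Sequence[Model], proxy: Data,
                     steps: int = 500, lr: float = 0.5) -> List[float]:
    """Hypothetical controller: learn per-client logits that minimize the proxy loss."""
    logits = [0.0] * len(models)  # start from uniform weights
    n = len(proxy)
    for _ in range(steps):
        w = softmax(logits)
        a, b = aggregate(models, w)
        # Gradients of the proxy MSE with respect to the aggregated parameters.
        ga = sum(2 * (a * x + b - y) * x for x, y in proxy) / n
        gb = sum(2 * (a * x + b - y) for x, y in proxy) / n
        # dL/dw_k = ga * a_k + gb * b_k, since a = sum_k w_k a_k and b = sum_k w_k b_k.
        gw = [ga * m[0] + gb * m[1] for m in models]
        # Chain rule through the softmax: dL/dz_k = w_k * (gw_k - sum_j w_j gw_j).
        avg = sum(wk * g for wk, g in zip(w, gw))
        logits = [z - lr * wk * (g - avg) for z, wk, g in zip(logits, w, gw)]
    return softmax(logits)


# Toy example: only the first client model fits the proxy data y = 2x.
clients = [(2.0, 0.0), (0.0, 1.0), (-1.0, 3.0)]
proxy_set = [(float(x), 2.0 * x) for x in range(4)]
weights = train_controller(clients, proxy_set)
print([round(w, 3) for w in weights])
```

Unlike FedAvg, the weights here come from how well the aggregate performs on the proxy data, so the client whose model matches the proxy distribution ends up dominating.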

In one of the federated learning settings tested, FedMcon achieved a communication speedup of up to 19 times. The researchers evaluated the method on three datasets, MovieLens 1M, FEMNIST, and CIFAR-10, under a range of federated learning conditions: cross-silo and cross-device settings, varying degrees of non-IID data, different numbers of local training epochs, and different numbers of participating clients.
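A common way to express a communication speedup is the ratio between the number of communication rounds a baseline needs to reach a target metric and the number the new method needs. The article does not state exactly how the 19x figure was computed, so this definition is an assumption. The helper names below are hypothetical, and the curves in the usage example are made-up toy numbers, not results from the paper.

```python
from typing import Optional, Sequence


def rounds_to_target(curve: Sequence[float], target: float) -> Optional[int]:
    """Return the 1-based round at which the metric first reaches target, or None if never."""
    for round_idx, value in enumerate(curve, start=1):
        if value >= target:
            return round_idx
    return None


def communication_speedup(baseline_curve: Sequence[float],
                          method_curve: Sequence[float],
                          target: float) -> Optional[float]:
    """Rounds needed by the baseline divided by rounds needed by the method (assumed definition)."""
    base = rounds_to_target(baseline_curve, target)
    new = rounds_to_target(method_curve, target)
    if base is None or new is None:
        return None  # undefined if either run never reaches the target
    return base / new


# Toy accuracy-per-round curves (illustrative only).
baseline = [0.50, 0.60, 0.65, 0.70, 0.72, 0.75]
method = [0.60, 0.75, 0.80]
print(communication_speedup(baseline, method, target=0.75))  # baseline: 6 rounds, method: 2
```

Because the result depends on the chosen target, a speedup figure is only meaningful together with the metric threshold it was measured at.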

Impressive Results and Future Implications

Across all tested scenarios, FedMcon outperformed leading federated learning methods on the reported metrics: area under the curve (AUC), hit rate (HR), normalized discounted cumulative gain (NDCG), and top-1 accuracy. It also converged faster than the methods it was compared against.
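The article does not describe the evaluation protocol behind the ranking metrics. As a sketch under one common assumption (leave-one-out evaluation with a single held-out item per user and binary relevance), HR@K records whether the held-out item appears in a user's top-K list, and NDCG@K discounts that hit by its position as $1/\log_2(\text{rank} + 2)$ with a 0-based rank. The function names are hypothetical.

```python
import math
from typing import Hashable, Sequence, Tuple


def hr_ndcg_at_k(ranked_items: Sequence[Hashable],
                 held_out_item: Hashable, k: int) -> Tuple[float, float]:
    """HR@K and NDCG@K for one user with a single relevant (held-out) item."""
    top_k = list(ranked_items[:k])
    if held_out_item not in top_k:
        return 0.0, 0.0
    rank = top_k.index(held_out_item)  # 0-based position in the top-K list
    return 1.0, 1.0 / math.log2(rank + 2)  # a hit at position 0 gets NDCG 1.0


def mean_hr_ndcg(rankings: Sequence[Sequence[Hashable]],
                 held_out: Sequence[Hashable], k: int) -> Tuple[float, float]:
    """Average both metrics over users (assumes at least one user)."""
    hits, gains = zip(*(hr_ndcg_at_k(r, h, k) for r, h in zip(rankings, held_out)))
    return sum(hits) / len(hits), sum(gains) / len(gains)


# Held-out item ranked second: HR = 1.0, NDCG = 1 / log2(3).
print(hr_ndcg_at_k(["m3", "m7", "m1"], "m7", k=10))
```

HR only asks whether the item was retrieved, while NDCG also rewards placing it near the top, which is why papers on recommendation usually report both.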

The research paper is authored by a team including Tao Shen, Zexi Li, Ziyu Zhao, Didi Zhu, Zheqi Lv, Kun Kuang, Shengyu Zhang, Chao Wu, and Fei Wu. The full text of the open-access paper is available at https://doi.org/10.1631/FITEE.2400530.

This work could make federated learning more efficient and effective in applications where data privacy is paramount. By learning how to aggregate client models instead of relying on a fixed averaging rule, FedMcon offers a way to cope with diverse data distributions that future federated learning research can build on.