The attorneys general of California and Delaware have raised urgent concerns about the safety of OpenAI’s chatbot, ChatGPT, particularly for young users. In a letter to OpenAI, sent after a meeting with the company’s legal team earlier this week, they highlighted the risks posed by the chatbot’s interactions with users.

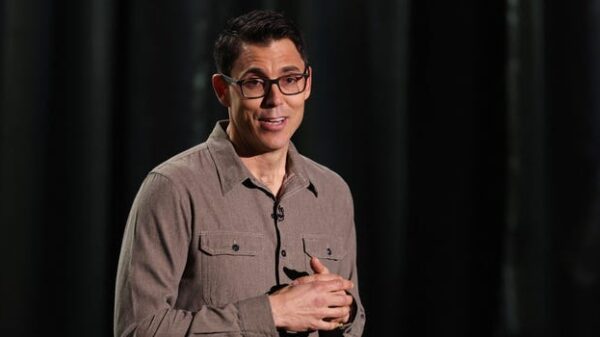

Rob Bonta of California and Kathy Jennings of Delaware are scrutinizing OpenAI’s plans to transition away from its nonprofit origins and are emphasizing the need for stronger safety oversight. Reports of dangerous interactions, including a suicide and a murder-suicide that have been linked to ChatGPT, have alarmed the officials.

Concerns Over Child Safety and Chatbot Interactions

The attorneys general are particularly focused on what these incidents mean for children and teenagers. They argue that as AI chatbots become more widely used, ensuring the safety of younger users is paramount. In their letter, they said they expect OpenAI to take proactive measures to address these risks.

Bonta and Jennings are advocating for clear safety protocols that would guide the development of AI technologies. They have emphasized the importance of incorporating robust oversight mechanisms to prevent harmful interactions. The officials maintain that while technological advancements can offer significant benefits, they should not come at the expense of user safety.

OpenAI’s Response and Future Plans

OpenAI is currently reviewing its operational framework and is expected to respond to the concerns raised by the attorneys general. The company has previously stated its commitment to responsible AI development and has acknowledged the importance of user safety.

As discussions continue, both Bonta and Jennings remain vigilant, underscoring their roles in overseeing OpenAI’s restructuring efforts. They seek to collaborate with the company to ensure that safety measures are not just an afterthought but an integral part of the development process.

The call for action from these officials reflects a broader conversation about the responsibilities of tech companies in safeguarding users, particularly vulnerable populations. As AI chatbots continue to evolve, the emphasis on ethical standards and user protection will likely become increasingly crucial in shaping the future of artificial intelligence.